In today’s data-driven world, managing uncertainty in flood risk models is a significant challenge for risk professionals.

The abundance of data and complex modeling processes often lead to polarized attitudes: some users lack trust in model results, while others accept them blindly. This dichotomy can result in under-informed or overly optimistic decision-making, neither of which is conducive to effective risk management.

In this article, we take a look at the inherent uncertainty in flood risk modeling, emphasizing the importance of a balanced, evidence-based approach to interpreting and utilizing model outputs.

Learn more in our panel webinar on ‘Understanding and managing flood risk uncertainty’

Understanding the complexity of flood risk modeling

Flood modeling is inherently uncertain. The complexity involved is huge: a large number of subjective judgments and objective data variables feed into the modeling process, and each can have a significant influence on the final results.

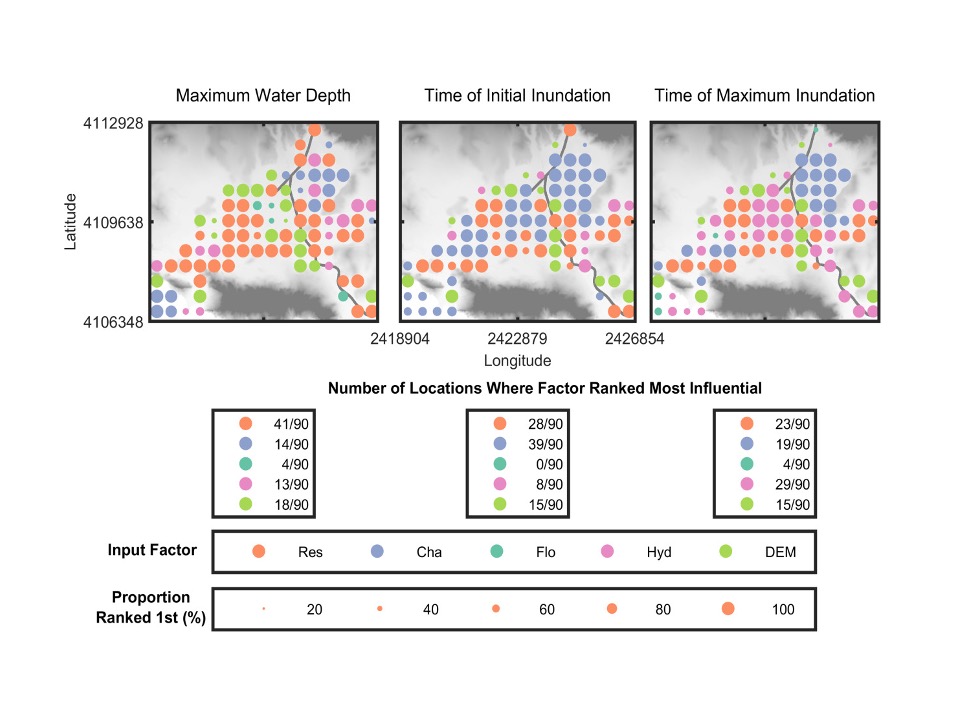

Savage et al. explored model sensitivity to several factors, including spatial resolution (grid size, Spa), boundary conditions (the input of water onto the grid, Hyd), channel parameters (where the rivers are, Cha), floodplain parameters (conditions that affect flooding, e.g. rainfall, land use and soil type, Flo) and digital elevation models (surface height, DEM).

The research (see figure above) showed that the influence of each factor varies over time and space. It found that choices concerning spatial resolution and model parameters significantly impact flood model outputs, which underscores the need for modelers to communicate their choices and uncertainties transparently to users.

An understanding of these variations is very rarely passed down to the end user. This is partly because risk professionals have to consider flood as only one piece of a complex physical risk landscape. As a result, we have seen professionals across various disciplines opt to stick with the same model that they have used for their entire careers. Whilst this might be the easy option in the short term, it is probably leading to a gross misrepresentation of risk and its associated uncertainty. Even where a legacy model is the de facto standard for an organization, we recommend considering a multi-model approach to check that your primary data is providing realistic results.
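As a rough illustration of what a multi-model check could look like, the sketch below compares flood depth estimates from several models at one location and flags large disagreement. The model names, depth values and the 0.5 threshold are all hypothetical and chosen for illustration only; they are not taken from any real dataset or vendor.

```python
from statistics import mean, pstdev


def model_spread(depths_m):
    """Summarise disagreement between depth estimates (metres) from several models."""
    values = list(depths_m.values())
    avg = mean(values)
    # Coefficient of variation; treated as 0 when every model predicts a dry location.
    cv = pstdev(values) / avg if avg > 0 else 0.0
    return {"mean": avg, "range": max(values) - min(values), "cv": cv}


def models_disagree(depths_m, cv_threshold=0.5):
    """Return True when relative spread exceeds a (hypothetical) threshold."""
    return model_spread(depths_m)["cv"] > cv_threshold


# Hypothetical depth estimates for one asset at the same return period.
estimates = {"legacy_model": 0.1, "model_b": 0.9, "model_c": 1.1}
summary = model_spread(estimates)
flagged = models_disagree(estimates)
```

A flagged location is not necessarily wrong in any one model; it is simply a place where the legacy model's answer deserves a closer look before it drives a decision.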

Approaching uncertainty: The rise of the realists

In a 2017 article, Marek Shafer called for “the rise of the realists” – a breed of model users that are committed to exploring the influencing variables of their models from the ground up, taking an evidence-based view of the results that they receive.

To become a “realist”, model users should adopt an inquisitive and balanced approach to consuming model results.

Prof Paul Bates’ recent paper on overconfidence in inundation models drew a similar conclusion: if your flood data provider is offering individual asset-level inundation data, you should be asking them questions. If they’re purporting to do that under future climate scenarios, you should be asking them a lot of questions.

This realist approach not only aids in comprehending the models but also fosters confidence in the data you’re utilizing, promoting a deeper understanding of where the data has come from, how robust it is, what scientific approach it is built upon and where the uncertainty lies. While some uncertainties are more obvious (it is clear that climate data for 2100 will be less reliable than that for 2030), other aspects require model vendors to communicate their confidence in data accuracy transparently and to reveal their model’s workings.

Distinguish model features from the noise with our guide ‘Questions to ask your flood modelers’

Fathom’s approach to uncertainty

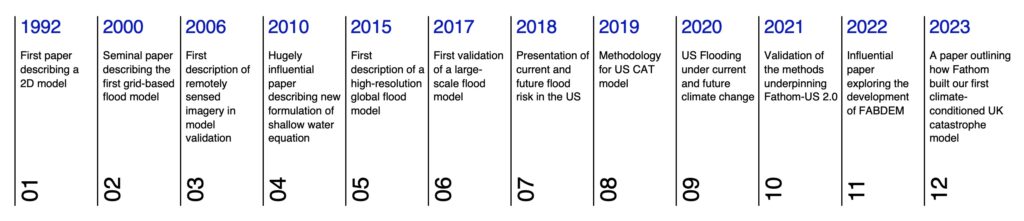

Fathom originated 10 years ago from the Hydrology Research Group at the University of Bristol, one of the leading centers for hydrology in the world. The last decade has seen significant advancements in our ability to represent the real-world dynamics of flood risk in a virtual environment.

Our primary goal at Fathom has always been to build large-scale maps in areas that have traditionally been hard to model. This ambitious aim has been enabled by our commitment to research and our continued academic heritage.

Fathom’s research timeline showcases notable publications that go as far back as Professor Paul Bates’ foundational work on raster-based models in 1992. Our academic heritage predates Fathom’s formation and showcases our organization’s continued commitment to publishing research as it grows. In 2023, we directly published six peer-reviewed papers, collaborating extensively with external academic partners worldwide. You can find the papers here.

Our approach lies in subjecting our data and methods to the scrutiny of peer review to ensure robustness and credibility. However, Fathom’s commitment to opening up our methodology and communicating uncertainty does not stop there. As we have grown, and our internal capacity has increased, our team has worked hard to deliver tools that aid the understanding of our models and the residual uncertainty, such as:

Fathom’s Metadata

Assess how uncertainties affect confidence in model predictions.

Last year, Fathom released Metadata – an additional layer of information that provides, for the first time, a digestible evaluation of uncertainties in our products.

Fathom’s Metadata is available in two forms:

- Certainty Ranks – a quantitative ranking of our certainty in our flood map data, from 1 to 10 (1 = best, least uncertainty; 10 = worst, most uncertainty).

- Terrain Metadata – information about our terrain data including instrument type, underlying resolution and vintage.

These new features facilitate a much more holistic assessment of model uncertainties to better inform decision support and deliver a level of granularity that has not been seen in the industry before.
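To show how a 1-to-10 certainty rank might feed into a workflow, here is a minimal sketch that flags assets whose flood data carries a high rank (more uncertainty) for further review. The asset names, ranks and the review cut-off of 7 are assumptions made up for this example, not part of Fathom's Metadata.

```python
def needs_review(certainty_rank, review_from=7):
    """Flag data for review when its rank is at or above a hypothetical cut-off.

    Ranks follow the convention above: 1 = least uncertainty, 10 = most uncertainty.
    """
    if not 1 <= certainty_rank <= 10:
        raise ValueError("certainty rank must be between 1 and 10")
    return certainty_rank >= review_from


# Hypothetical portfolio: asset name -> certainty rank of the underlying flood data.
assets = {"warehouse_a": 2, "depot_b": 8, "office_c": 7}
to_review = sorted(name for name, rank in assets.items() if needs_review(rank))
```

The cut-off is a judgment call for each organization; the point is that uncertainty information travels with the data rather than being dropped.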

Fathom’s Risk Scores

Powerful flood risk metrics for any global location, under any climate scenario

Risk Scores consist of two data layers: Relative Risk and Risk Category. Their aim is to provide simple yet powerful tools for risk professionals to quantify and determine flood risk for any given location globally.

To account for uncertainty, Fathom’s Risk Category not only considers locations within the extreme flood outline (1-in-1000 year flood) but also locations outside but proximate to the extreme flood zone (proximate in both distance from the floodplain and elevation above the flood level). This categorization process provides a simplified yet powerful representation of flood risk that harnesses the intelligence of Fathom’s flood maps to highlight hotspot areas of flood risk as well as encompass uncertainties in flood modeling.
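The idea of treating locations just outside the extreme flood outline as still exposed can be sketched as a simple rule. This is an illustrative simplification, not Fathom's actual categorization: the category names, the `Location` fields and the 100 m and 1 m thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Location:
    inside_extreme_outline: bool   # within the 1-in-1000 year flood outline
    distance_to_outline_m: float   # horizontal distance to that outline
    height_above_flood_m: float    # elevation above the extreme flood level


def categorize(loc, max_distance_m=100.0, max_height_m=1.0):
    """Assign a coarse category; both thresholds are assumed values."""
    if loc.inside_extreme_outline:
        return "inside"
    # Proximate means close in BOTH distance and elevation, as described above.
    near = loc.distance_to_outline_m <= max_distance_m
    low = loc.height_above_flood_m <= max_height_m
    return "proximate" if near and low else "outside"
```

For example, a site 50 m from the outline and 0.5 m above the flood level would be "proximate" under these assumed thresholds, while the same site 3 m above the flood level would be "outside".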

Interested in Risk Scores? Learn more in our recent product briefing.

Uncertainty in future climate modeling

A multi-ensemble approach to future climate scenarios

Fathom’s Climate Dynamics framework explicitly incorporates an ensemble of climate models to represent uncertainty in various global warming levels and emission scenarios.

This approach ensures that we are not just using one static representation of the future but accounting for a range of different percentiles within our risk scores and flood depth calculations. By doing this, our Climate Dynamics framework enables users to toggle between different pathways and scenarios to explore the impact of any plausible future on flood risk.
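A minimal sketch of the percentile idea, assuming each climate model in an ensemble yields one flood depth for a location under a given scenario: rather than reporting a single value, report a low, central and high percentile. The scenario labels and depths below are invented for illustration and do not come from the Climate Dynamics framework.

```python
from statistics import quantiles


def ensemble_percentiles(depths_m):
    """Return the 10th, 50th and 90th percentiles of ensemble depths (metres)."""
    # n=10 yields nine cut points at 10%, 20%, ..., 90%.
    cuts = quantiles(depths_m, n=10, method="inclusive")
    return {"p10": cuts[0], "p50": cuts[4], "p90": cuts[8]}


# Hypothetical depths from five ensemble members under two warming levels.
ensemble = {
    "warming_2C": [0.4, 0.6, 0.5, 0.9, 0.7],
    "warming_4C": [0.8, 1.3, 1.0, 1.9, 1.4],
}
summary_by_scenario = {name: ensemble_percentiles(d) for name, d in ensemble.items()}
```

Comparing the gap between `p10` and `p90` across scenarios gives a quick sense of how much the ensemble members disagree, which is itself useful information for a decision-maker.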

Understanding uncertainty in your data

When modeling flood risk or working with model outputs, acknowledging and managing uncertainty is paramount. Fathom’s approach to uncertainty sets a benchmark for the industry and outlines the importance of transparent and research-driven solutions. By embracing a realistic perspective, challenging vendors, and adopting advanced tools, professionals can navigate the complex landscape of flood risk modeling effectively, ensuring informed decision-making and robust risk mitigation strategies.